If you’re hopping along with us, you might have heard that we just launched our Smart Preloader for Bunny Optimizer. Smart Preloader is our answer to annoying wait times, and it gives you a chance to bring your brand closer to your users.

Wanna know how we made it happen? For the curious, we wrote this blog post to tell you about the technical challenges we solved to bring you Smart Preloader.

Challenges and Goals

Sometimes, the origin can’t respond fast enough to serve content to a client. Preloader screens let users know that the website is on its way, so they don’t leave before it finishes loading.

If done poorly, a preloader screen can extend wait times and lead to even more frustration for the user. If we’re showing a preloader, the user’s already waiting for the website to load. We didn’t want to make that wait even longer. We needed a solution that would add as little overhead as possible.

To make sure we did it right, we had to:

- Keep overhead as low as possible

- Preserve the original request, instead of duplicating or modifying it

Going for Zero Overhead

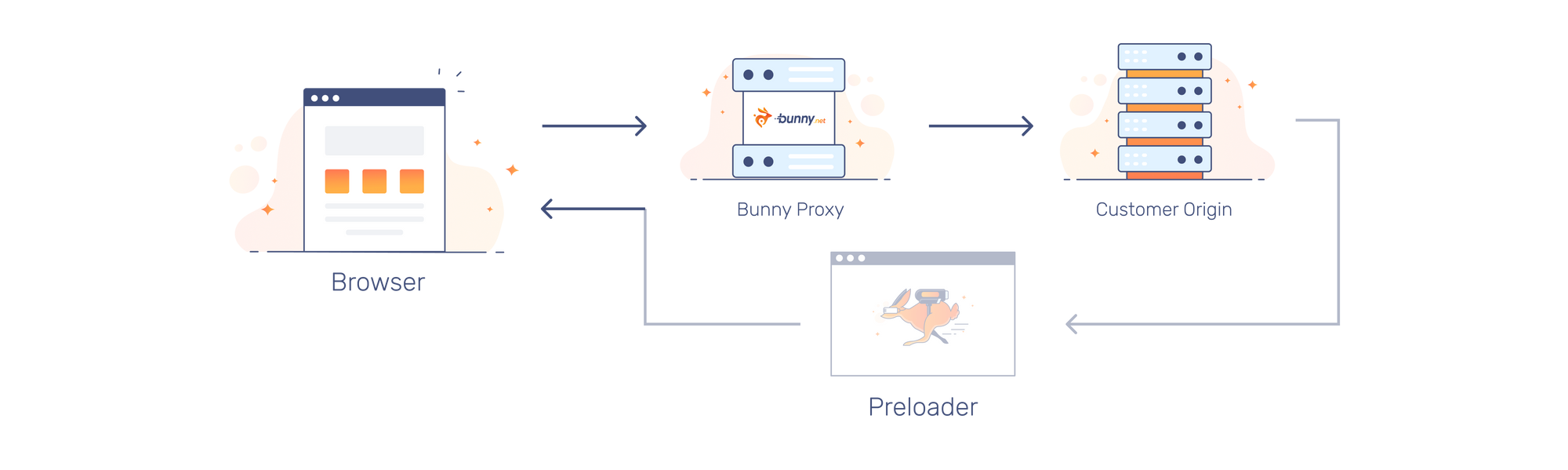

At bunny.net, every request goes through layers of infrastructure. Each layer has its own task to ensure data gets where it needs to go. The last layer in a request’s lifecycle is our Bunny Proxy, a custom-built reverse proxy that we designed to give us full control of the network stack.

When Smart Preloader is enabled, instead of modifying the request flow, Bunny Proxy immediately sends the request to the origin as with any other request.

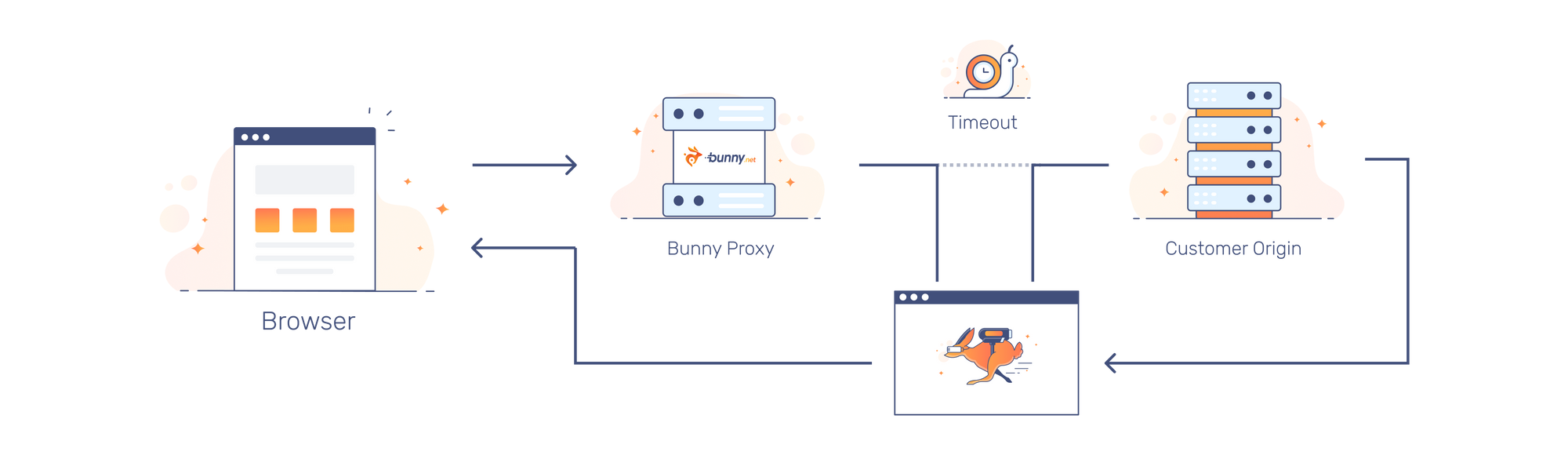

At the same time, Bunny Proxy starts a special cancellation token set to the configured timeout. The token is responsible for triggering the disconnect. If the origin responds before the token times out, Bunny Proxy returns the response to the client with no modifications. This ensures that bunny.net serves the request with no added delay.

If the origin doesn’t respond before the timeout, the token triggers. Bunny Proxy then detaches the client’s request from the request it sent to the origin and responds with the preloader screen instead.

At the same time, the original request is kept alive, and tagged with a code linking it to the preloader response. This lets the Smart Preloader screen render, then immediately reconnect back to the original request.
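The flow above can be sketched in a few lines of Python. This is only an illustration of the idea, not Bunny Proxy's actual code: `fetch_origin`, `handle_request`, the `pending` registry, and the placeholder HTML are all hypothetical names, and `asyncio.wait` with a timeout stands in for the cancellation token.

```python
import asyncio
import secrets
from typing import Dict, Optional, Tuple

PRELOADER_HTML = "<html><body>Loading...</body></html>"  # placeholder page

# Hypothetical registry: reconnect code -> origin request still in flight.
pending: Dict[str, asyncio.Task] = {}


async def fetch_origin(delay: float) -> str:
    """Stand-in for the real origin request; sleeps to simulate latency."""
    await asyncio.sleep(delay)
    return "<html><body>Real page</body></html>"


async def handle_request(origin_delay: float, timeout: float) -> Tuple[str, Optional[str]]:
    """Return (body, code). code is None when the origin answered in time."""
    # Send the request to the origin right away, as with any other request.
    origin_task = asyncio.create_task(fetch_origin(origin_delay))

    # Wait up to `timeout`. asyncio.wait does not cancel the task when the
    # timeout expires, so the origin request stays alive in that case.
    done, _ = await asyncio.wait({origin_task}, timeout=timeout)
    if done:
        return origin_task.result(), None  # fast path: unmodified response

    # Timeout fired first: keep the origin request running, tag it with a
    # code, and answer with the preloader screen instead.
    code = secrets.token_urlsafe(16)
    pending[code] = origin_task
    return PRELOADER_HTML, code
```

Calling `handle_request(0.01, 0.2)` in this sketch returns the real page with no code, while `handle_request(0.2, 0.01)` returns the preloader page plus a code that can later be used to pick the origin response back up from `pending`.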

Preloader in Action

To show you how this works, we created an origin in Europe that responds with a 3-second delay, then requested it from Asia.

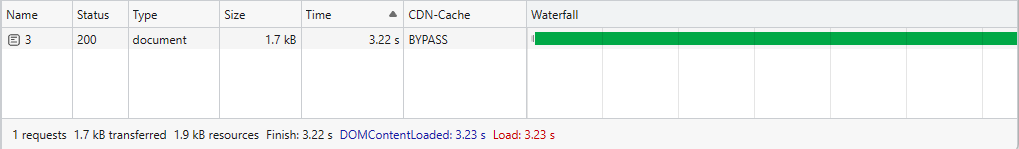

With Smart Preloader disabled, the browser shows a white screen for the whole wait until the page loads. The total time to start rendering the page without Smart Preloader was 3.23 seconds.

The image below shows how this looks to the end user. The browser keeps a blank screen until the HTML finally loads at 3.23 seconds.

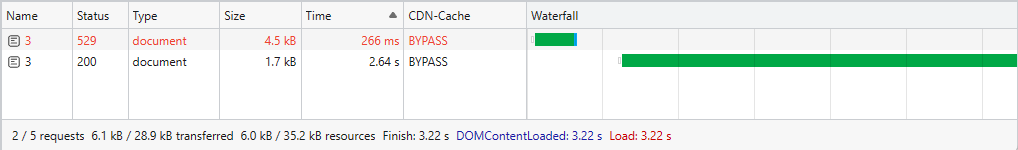

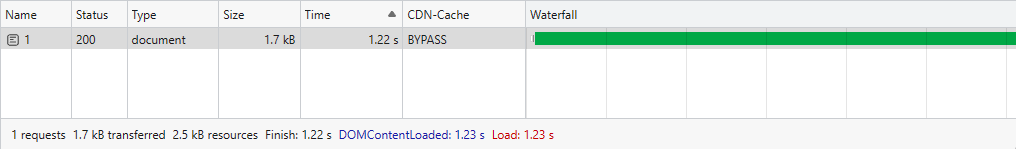

With Smart Preloader enabled, the request splits after the 266 ms mark. The user sees the preloader almost immediately, and the browser reconnects to the original request after a short delay. The total time to start rendering the page is 3.22 seconds. (The time without Smart Preloader was actually a bit higher here, but the difference is within a normal margin of error.)

Once again, we include the visualized example for comparison. This time, the user is quickly greeted with a branded preloading screen until the backend finally replies and the browser starts rendering the HTML after 3.22 seconds.

So you can see that Smart Preloader keeps added latency very close to zero. Since we only show the preloader screen when the origin is taking longer than usual, we can create a better experience for the end user.

Reconnecting to the Original Request

So how do we securely reconnect the browser back to the original request? We tested a few different ways:

- IP, User-Agent and URL combination

- IP, URL, and query string combination

- IP, URL, and cookie combination

While the first option seemed the cleanest, we tossed it out quickly. Because of Network Address Translation (NAT), it’s not uncommon for multiple users to appear as a single IP to the outside internet. Relying on the IP address to tell users apart could cause response mix-ups.
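To make the NAT problem concrete, here is a small sketch of what a lookup key built from IP, User-Agent, and URL might look like. The `request_key` function and the sample values are hypothetical and only illustrate the collision; they are not how Bunny Proxy identifies requests.

```python
import hashlib


def request_key(ip: str, user_agent: str, url: str) -> str:
    """Derive a lookup key from connection metadata only (hypothetical)."""
    raw = "\n".join((ip, user_agent, url))
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


# Two different people in the same office share one public IP because of
# NAT, run the same browser build, and open the same page.
SHARED_IP = "203.0.113.7"  # documentation-range address, for illustration
BROWSER = "ExampleBrowser/1.0"
PAGE = "https://example.com/pricing"

alice_key = request_key(SHARED_IP, BROWSER, PAGE)
bob_key = request_key(SHARED_IP, BROWSER, PAGE)

# The keys are identical, so the proxy could not tell which pending
# response belongs to which user.
print(alice_key == bob_key)
```

Nothing in the key distinguishes the two users, which is exactly the mix-up described above.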

The second option used a query string parameter that would appear at the end of each URL after the preloader was refreshed. While this seemed great for user privacy, this method would have caused unwanted URL changes and redirects. It could cause problems if the user copies and pastes the URL to share with someone else, for example.

Lastly, we tried the cookie-based version. We initially steered away from this option to prevent privacy misconceptions, but it ended up being the cleanest, least obtrusive way to reconnect requests. When the preloader screen is returned, a small, short-lived cookie is also included in the response.

When the preloader page refreshes, the browser sends the cookie back to the server. The cookie tells Bunny Proxy to find the original HTTP request sent to the origin and attach the new request to it.

This method introduced almost no overhead and let Bunny Proxy handle the request just like it would handle any other.
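A minimal sketch of the cookie-based reconnect could look like the following. The cookie name, its lifetime, and the `park_request` and `reconnect` helpers are assumptions made for illustration; the post does not describe the real cookie or Bunny Proxy's internals. The sketch uses Python's standard `http.cookies` module to build and parse the headers.

```python
import asyncio
import secrets
from http.cookies import SimpleCookie
from typing import Dict, Optional

COOKIE_NAME = "preloader_token"  # hypothetical cookie name
COOKIE_MAX_AGE = 30              # seconds; hypothetical short lifetime

# Hypothetical registry: token -> origin request still in flight.
pending: Dict[str, asyncio.Task] = {}


def park_request(origin_task: asyncio.Task) -> str:
    """Store the in-flight origin task and return a Set-Cookie header value."""
    token = secrets.token_urlsafe(16)
    pending[token] = origin_task
    cookie = SimpleCookie()
    cookie[COOKIE_NAME] = token
    cookie[COOKIE_NAME]["max-age"] = COOKIE_MAX_AGE
    cookie[COOKIE_NAME]["httponly"] = True
    cookie[COOKIE_NAME]["path"] = "/"
    return cookie[COOKIE_NAME].OutputString()


async def reconnect(cookie_header: str) -> Optional[str]:
    """Attach a follow-up request to the parked origin task, if one exists."""
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    morsel = cookie.get(COOKIE_NAME)
    if morsel is None:
        return None  # no token: handle like any ordinary request
    task = pending.pop(morsel.value, None)  # each token works only once
    if task is None:
        return None  # unknown or already used token
    return await task  # wait for, or immediately read, the origin response
```

Because the token is random and removed on first use, a second request carrying the same cookie falls through to normal handling instead of receiving someone else's response.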

Close to Zero Overhead

Of course, absolute zero overhead isn’t always possible, but we came as close to it as we possibly could. Our smart request-handling design ensures that most requests see no additional latency at all.

The preloader screen only shows up when requests are already noticeably delayed. If configured correctly, Smart Preloader only steps in for requests with slower-than-average response times, so there is a high chance that the short overlap during reconnection will not affect load times.

Even when the origin replies immediately after the preloader is shown, our global edge network keeps overhead as close to zero as possible.

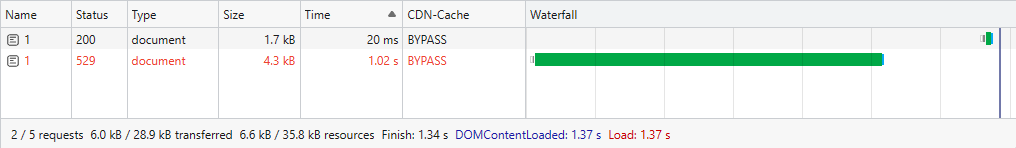

In this example, the response arrives just as the preloader is being shown.

We consider this the most extreme case. The result was a roughly 150 ms increase in load time, mainly caused by a short delay in the preloader code that makes sure the browser loads some assets before triggering a refresh.

Because our edge network's average global latency is under 26 ms, the extra round trip needed to reconnect is short. This lets us offer a close-to-zero-overhead system as a simple, seamless integration for any website.

And that’s how we made a preloader that introduces near-zero overhead and improves the user experience.

Want to Help Us Hop Faster?

At bunny.net, we're on a mission to make the internet hop faster. We operate one of the fastest global networks and improve the internet experience for over 1 million websites. We're obsessed with innovation and making the internet a better place. If you connect with our mission and enjoyed this blog post, make sure to check out our careers page and join the fluffle!

We're working on many exciting new projects, and we would love to get your help.